December 13, 2022

By: AEOP Membership Council Members Iishaan Inabathini and Samina Mondal

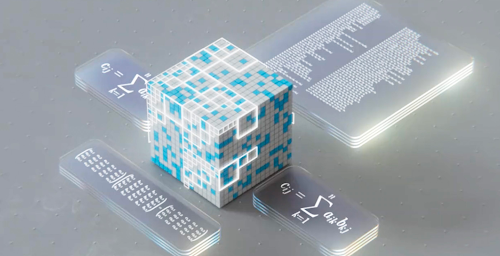

DeepMind, a company known for its breakthroughs in artificial intelligence, recently published its findings on AlphaTensor, a deep reinforcement learning method for discovering “novel, efficient, and provably correct” algorithms for matrix multiplication. The algorithms AlphaTensor found for large matrices ran 10-20% faster than commonly used matrix multiplication algorithms on the same hardware.

Matrix multiplication is performed so often in real applications that the efficiency of its algorithms is incredibly important. In machine learning, neural networks (including the one behind AlphaTensor) spend much of their computation on matrix and tensor multiplications. The need for efficient matrix multiplication also extends well beyond machine learning: it is an essential part of 2D and 3D graphics, digital communications, and scientific computing, to name a few.

DeepMind’s publication gained instant attention in the machine learning and computer science communities after appearing in the renowned scientific journal Nature. Many researchers are already rethinking how they approach matrix multiplication.

AlphaTensor was built on AlphaZero, a general-purpose deep reinforcement learning agent that learned to master the games of chess, shogi, and Go. The team turned the search for matrix multiplication algorithms into a single-player game. In the game, the playing board is a 3-dimensional tensor that captures how far the current algorithm is from being correct: each move removes part of that tensor, and the game is won when the tensor has been reduced to zero. Once a correct algorithm is found, its efficiency is measured by the number of steps (that is, the number of multiplications) it took to get there.
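To make the game more concrete, here is a minimal Python sketch of the idea as we understand it from the Nature paper: the starting board is the tensor that describes 2x2 matrix multiplication, each move subtracts one rank-one piece built from three coefficient vectors `u`, `v`, `w`, and reaching the all-zero tensor means the move list is a correct algorithm whose length equals its number of multiplications. All names here are our own hypothetical choices, not DeepMind's code, and no learning agent is included; the sketch only replays two known move lists, the schoolbook method (8 moves) and Strassen's 1969 algorithm (7 moves).

```python
from itertools import product

# Hypothetical sketch of the "tensor game" idea; not DeepMind's code.
# Entries of a 2x2 matrix X are indexed row-major: [X11, X12, X21, X22].
N = 2


def matmul_tensor(n=N):
    """Starting board: T[a][b][c] = 1 when A-entry a times B-entry b
    contributes to C-entry c in C = A @ B."""
    s = n * n
    t = [[[0] * s for _ in range(s)] for _ in range(s)]
    for i, k, j in product(range(n), repeat=3):
        t[i * n + k][k * n + j][i * n + j] = 1
    return t


def play_move(state, u, v, w):
    """One move: subtract the rank-one tensor u (x) v (x) w from the board."""
    s = len(state)
    return [[[state[a][b][c] - u[a] * v[b] * w[c] for c in range(s)]
             for b in range(s)]
            for a in range(s)]


def play(moves, n=N):
    """Replay a move list from the start; return (won, number_of_moves).
    'won' means the board reached the all-zero tensor."""
    state = matmul_tensor(n)
    for u, v, w in moves:
        state = play_move(state, u, v, w)
    won = all(x == 0 for plane in state for row in plane for x in row)
    return won, len(moves)


def naive_moves(n=N):
    """Schoolbook algorithm: one move per product A[i][k] * B[k][j]."""
    s = n * n

    def unit(idx):
        vec = [0] * s
        vec[idx] = 1
        return vec

    return [(unit(i * n + k), unit(k * n + j), unit(i * n + j))
            for i, k, j in product(range(n), repeat=3)]


# Strassen's algorithm as 7 moves: u weights entries of A, v weights
# entries of B, w says which entries of C receive the product (and sign).
STRASSEN = [
    ([1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1]),    # M1 = (A11 + A22)(B11 + B22)
    ([0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, -1]),   # M2 = (A21 + A22) B11
    ([1, 0, 0, 0], [0, 1, 0, -1], [0, 1, 0, 1]),   # M3 = A11 (B12 - B22)
    ([0, 0, 0, 1], [-1, 0, 1, 0], [1, 0, 1, 0]),   # M4 = A22 (B21 - B11)
    ([1, 1, 0, 0], [0, 0, 0, 1], [-1, 1, 0, 0]),   # M5 = (A11 + A12) B22
    ([-1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]),   # M6 = (A21 - A11)(B11 + B12)
    ([0, 1, 0, -1], [0, 0, 1, 1], [1, 0, 0, 0]),   # M7 = (A12 - A22)(B21 + B22)
]


def multiply(a, b, moves, n=N):
    """Use a winning move list as an algorithm. Each move costs one
    multiplication of two data-dependent values; scaling by the small
    constants in u, v, w and the additions are not counted."""
    fa = [x for row in a for x in row]
    fb = [y for row in b for y in row]
    c = [0] * (n * n)
    for u, v, w in moves:
        m = (sum(ui * x for ui, x in zip(u, fa))
             * sum(vi * y for vi, y in zip(v, fb)))  # the counted multiplication
        for idx, wi in enumerate(w):
            c[idx] += wi * m
    return [c[i * n:(i + 1) * n] for i in range(n)]
```

Under these assumptions, `play(naive_moves())` gives `(True, 8)`, `play(STRASSEN)` gives `(True, 7)`, and `multiply([[1, 2], [3, 4]], [[5, 6], [7, 8]], STRASSEN)` returns `[[19, 22], [43, 50]]`. In this framing, AlphaTensor's job is to search for winning move lists that use as few moves as possible.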

The number of possible moves at each step of this game is 30 orders of magnitude larger than in Go, the game mastered by AlphaGo, an earlier DeepMind system that famously beat a Go world champion. The space of algorithms AlphaTensor explores is far beyond what humans could search by hand, and AlphaTensor can find thousands of matrix multiplication algorithms for each matrix size. AlphaTensor also rediscovered the commonly used fast matrix multiplication algorithms from scratch.
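As a rough sanity check on the "30 orders of magnitude" figure, the sketch below counts the moves available at each step. It assumes (our reading of the Nature paper, not a formula quoted from it) that every entry of the three move vectors `u`, `v`, `w` is chosen from {-2, -1, 0, 1, 2}, so an n x n problem offers 5^(3n²) possible moves. For Go it uses an upper bound of 362 moves (any of the 19 x 19 points, or pass). The names `COEFFS`, `GO_MOVES`, and `moves_per_step` are hypothetical.

```python
import math

# Assumption: each entry of u, v, w is drawn from this coefficient set.
COEFFS = (-2, -1, 0, 1, 2)
# Upper bound on legal moves in 19x19 Go: any of 361 points, or pass.
GO_MOVES = 19 * 19 + 1


def moves_per_step(n, coeffs=COEFFS):
    """Count (u, v, w) triples for n x n matrix multiplication:
    each of the three vectors has n*n entries chosen from coeffs."""
    return len(coeffs) ** (3 * n * n)


for n in (2, 3, 4):
    m = moves_per_step(n)
    # size of the move set, and how many orders of magnitude it exceeds Go
    print(f"{n}x{n}: {m:.2e} moves, ~10^{math.log10(m / GO_MOVES):.1f} times Go")
```

Under this assumption the 4x4 case comes out at about 3.55 x 10^33 moves per step, roughly 31 orders of magnitude more than Go, which is in line with the figure quoted above.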

This use of machine learning, adapting a model built to play board games in order to accelerate an algorithm at the heart of much of today's heavy computing, shows how flexible machine learning can be. Machine learning is now being applied across many sciences, and the field is growing rapidly. AlphaTensor advances our understanding of the vast space of matrix multiplication algorithms and makes many important computations more efficient, but it also shows how much possibility the field still holds. To create a new approach, all it takes is creativity.

References:

- Discovering novel algorithms with AlphaTensor. DeepMind. (n.d.). Retrieved November 15, 2022, from https://www.deepmind.com/blog/discovering-novel-algorithms-with-alphatensor

- Fawzi, A., Balog, M., Huang, A., Hubert, T., Romera-Paredes, B., Barekatain, M., Novikov, A., Ruiz, F. J. R., Schrittwieser, J., Swirszcz, G., Silver, D., Hassabis, D., & Kohli, P. (2022, October 5). Discovering faster matrix multiplication algorithms with reinforcement learning. Nature. Retrieved November 15, 2022, from https://www.nature.com/articles/s41586-022-05172-4

- Gao. (n.d.). Matrices in computer graphics | Gao’s blog. Gao’s Blog. Retrieved November 15, 2022, from https://vitaminac.github.io/Matrices-in-Computer-Graphics/
